A HISTORY OF ARTIFICIAL INTELLIGENCE IN 10 LANDMARKS

WHY IT MATTERS TO YOU

AI is an extraordinarily important and complex field. We've done our best to narrow down the 10 milestones in its history you should know.

Compressing all of artificial intelligence (AI) into 10 “moments to remember” isn’t easy. With hundreds of research labs and thousands of computer scientists, compiling a list of every landmark achievement would be, well, a job for a smart algorithm to handle.

With that proviso taken care of, however, we’ve scoured the history books to bring you what we think are the top 10 most significant milestones in the history of AI. Check them out below.

THE BIRTH OF NEURAL NETWORKS

Warren McCulloch and Walter Pitts’ “A Logical Calculus of the Ideas Immanent in Nervous Activity” might sound like a mouthful, but it’s as important to computer science as (if not more than!) “The PageRank Citation Ranking,” a.k.a. the research paper which spawned Google. In “A Logical Calculus,” McCulloch and Pitts describe how networks of artificial neurons can be made to perform logical functions. The dream of AI is born!
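To make "artificial neurons performing logical functions" concrete, here is a minimal sketch in Python. It assumes a simplified threshold unit with numeric weights, which is a common textbook rendering rather than the paper's exact formalism (the original treats inhibitory inputs somewhat differently). The function names are illustrative and do not come from the paper.

```python
def threshold_neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def AND(a, b):
    # Both inputs must be on to reach a threshold of 2.
    return threshold_neuron([a, b], [1, 1], 2)


def OR(a, b):
    # A single active input is enough to reach a threshold of 1.
    return threshold_neuron([a, b], [1, 1], 1)


def NOT(a):
    # A negative (inhibitory) weight keeps the unit silent when the input is on.
    return threshold_neuron([a], [-1], 0)


def XOR(a, b):
    # Wiring units together into a small network yields functions
    # that a single unit of this kind cannot compute.
    return AND(OR(a, b), NOT(AND(a, b)))
```

Calling `XOR(1, 0)` runs three simple units in sequence, which is the sense in which a network of such neurons can carry out logic.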

ARTIFICIAL INTELLIGENCE GETS ITS NAME

The conference takes place the following year at the 269-acre estate of Dartmouth College. Unfortunately, their timeline turns out to be a bit too optimistic. “We think a significant advance can be made … if a carefully selected group of scientists work on it for a summer,” they write. Things take a bit longer than that.

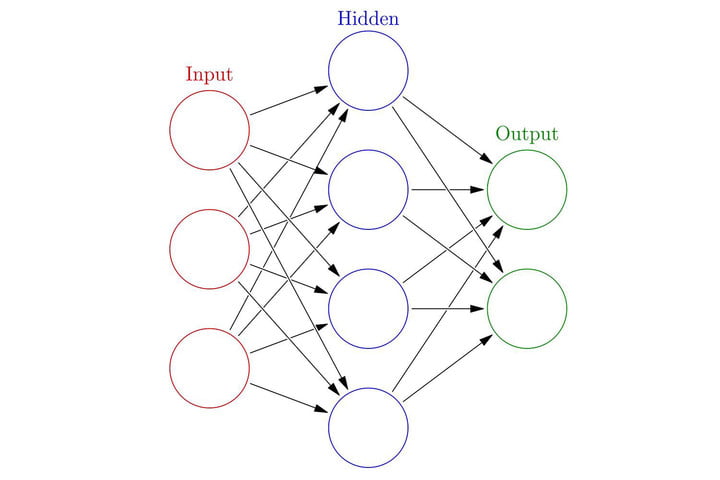

THE ARRIVAL OF ‘BACKPROP’

Backpropagation allows a neural network to adjust its hidden layers when the output it comes up with doesn’t match the one its creator is hoping for. In short, it means that creators can train their networks to perform better by correcting them when they make mistakes. Each time a mistake is corrected, backprop modifies the connections in the neural network so that it is more likely to get the answer right the next time it faces the same problem.
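The correction loop can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a production method: a network with two inputs, two hidden units and one output, sigmoid activations, a squared-error measure, no bias terms, and hand-picked starting weights. All names and numbers here are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, w_out):
    """Compute hidden-layer activations and the final output for input x."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, output


def backprop_step(x, target, w_hidden, w_out, lr=0.5):
    """Apply one correction: push the output error back through the hidden layer."""
    hidden, output = forward(x, w_hidden, w_out)
    # Error signal at the output unit (squared error, sigmoid derivative).
    delta_out = (output - target) * output * (1 - output)
    # Share of that error attributed to each hidden unit.
    delta_hidden = [delta_out * w_out[j] * hidden[j] * (1 - hidden[j])
                    for j in range(len(hidden))]
    # Nudge every connection against its contribution to the error.
    new_w_out = [w_out[j] - lr * delta_out * hidden[j]
                 for j in range(len(hidden))]
    new_w_hidden = [[w_hidden[j][i] - lr * delta_hidden[j] * x[i]
                     for i in range(len(x))]
                    for j in range(len(hidden))]
    return new_w_hidden, new_w_out


# One "problem": for this input, the creator wants an output close to 1.
x, target = [1.0, 0.0], 1.0
w_hidden = [[0.1, -0.2], [0.4, 0.3]]  # assumed starting weights
w_out = [-0.3, 0.2]

_, before = forward(x, w_hidden, w_out)
for _ in range(200):
    w_hidden, w_out = backprop_step(x, target, w_hidden, w_out)
_, after = forward(x, w_hidden, w_out)
```

Comparing `before` and `after` shows the output moving toward the target once the corrections have been applied. Real training repeats this over many examples rather than a single one.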

CONVERSING WITH COMPUTERS

Its creator noted at the time how surprised they were that users were so willing to converse with a machine in this way.

THE SINGULARITY

In an essay called “The Coming Technological Singularity,” Vinge predicted that, within the next 30 years, humankind would have the ability to create superhuman intelligence. “Shortly after, the human era will be ended,” he wrote. It’s a warning that others like Elon Musk have reiterated in the years since.

HERE COME THE SELF-DRIVING CARS

A few years later, a Carnegie Mellon researcher named Dean Pomerleau built an autonomous Pontiac Trans Sport minivan and used it to drive 2,797 miles coast to coast from Pittsburgh, PA to San Diego, CA. The tech was primitive by today’s standards, but the trip demonstrated that it could be done.

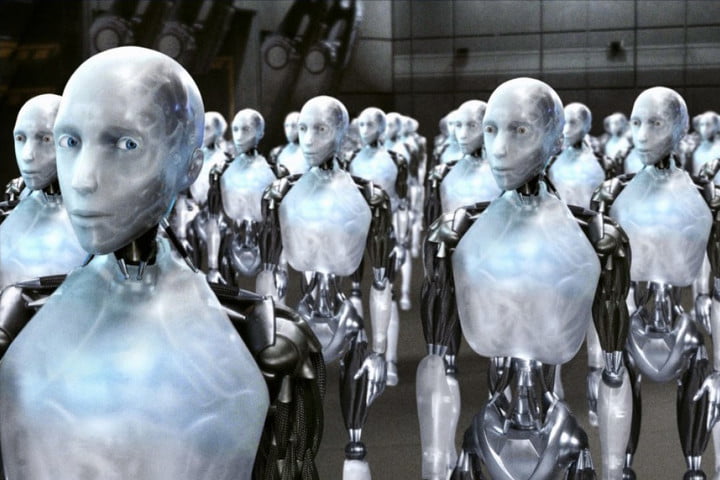

“THE BRAIN’S LAST STAND”

While the results may not have shown AI to be capable of anything more than working exceptionally well at problems with clearly defined rules, it was still a massive leap forward for artificial intelligence as a field.

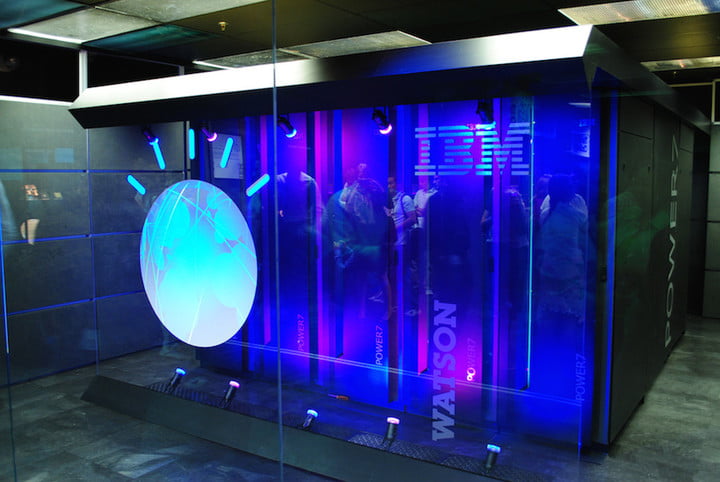

AI TRIUMPHS AT JEOPARDY!

Much as Deep Blue had faced off against Garry Kasparov, IBM’s AI took on another big challenge in 2011, when Watson competed against former Jeopardy! winners Brad Rutter and Ken Jennings at their game show of choice, and won the $1 million first-place prize. Conceding defeat, Ken Jennings quipped, “I, for one, welcome our new computer overlords.”

AI LOVES… CATS?

It turns out that, just like us, even impressively smart AI enjoys cat videos.

AI BEATS THE GO WORLD CHAMPION

by Luke Dormehl